From dial‑up to AI, the pace of adoption has compressed from decades to months. For coders, that means budgeting in dollars per token and planning for on‑prem LLMs are now daily concerns, not future speculation.

It's been a while since I've written a post that wasn't a weekly update. It's already 2026 (time flies), so I've decided to share my thoughts on the state of coding in 2026, along with a brief history from dial-up to AI.

Things are moving extremely fast, but I do have some thoughts from my perspective.

## The AI Impact

### The Elephant in the Room: ChatGPT’s Lightning‑Fast Public Adoption

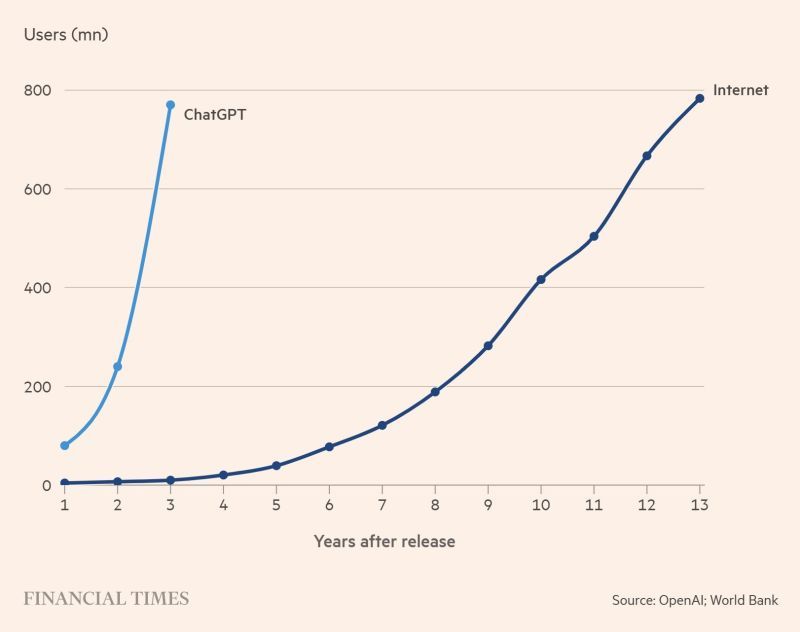

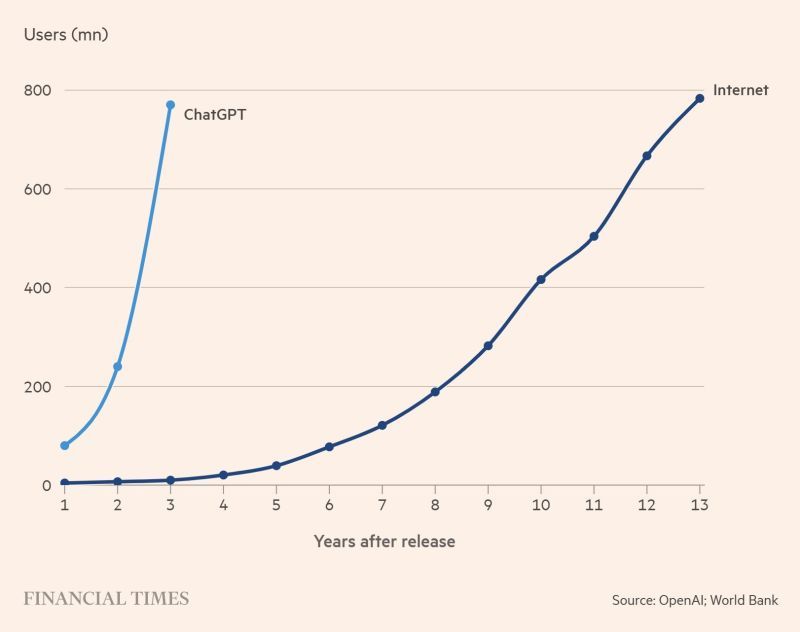

A month or two ago I saw this stat showing how quickly the public has adopted ChatGPT compared with how long it took the public to adopt the internet. Many folks still claim that we are in the dial-up stage of AI, if you map where we are with ChatGPT onto where we were in the early days of the internet.

### ChatGPT isn't alone

- Google DeepMind - Gemini Series

- Anthropic - Claude

- xAI - Grok

- Perplexity AI

These larger players are already challenging OpenAI in capability and pricing.

We're still in the early stages, so rapid change is inevitable. Soon more businesses will opt for local models that keep data in-house, and the cost of tokens will become a central discussion point for AI budgeting.

Expect rapid evolution ahead: just as the dial-up era gave way to broadband, today’s AI tools are set to become more capable, cheaper, and increasingly decentralized.

## From Dial-up to AI: A Timeline Comparison

The internet as we know it had its origins well before the public was able to get hold of it. I've listed what I think are some of the key events that got us to this point. I've also added a couple of predictions that I'll speak about later in this article.

## What This Means for Coders in 2026

### 1. Vibe‑Coding Becomes a Core Skill for All Engineers

Vibe-coding, once marketed as a “no-code” shortcut for non-technical users, is now a serious productivity tool for senior developers as well. By leveraging vibe-coding development agents, experienced engineers receive real-time guidance that accelerates both creation and remediation of code. Early internal pilots indicate a 70% reduction in context-switch time: tasks that previously required four hours now average about 1.2 hours when a vibe-agent is engaged. This shift means that seasoned engineers can focus more on architectural decisions while the agents handle repetitive scaffolding and bug-fix suggestions.

### 2. Workflow Automation Through Specialized Agents

The next wave of efficiency arrives from workflow-oriented agents that encapsulate common instructions. An agent might “create-API-endpoint,” “run-unit-tests,” or “generate-documentation” with a single prompt. When teams adopt these reusable recipes, sprint cycles that traditionally spanned ten days have contracted to roughly six days, a 40% reduction in cycle time. The result is a tighter feedback loop, allowing product iterations to reach users more rapidly.
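The percentages quoted in sections 1 and 2 are reductions in elapsed time, which is not the same number as the resulting speed-up. A minimal sketch of the arithmetic, using hypothetical helper names (`time_reduction` and `speedup` are mine, not from any tool mentioned here):

```python
def time_reduction(before: float, after: float) -> float:
    """Fraction of the original duration that was saved."""
    return (before - after) / before


def speedup(before: float, after: float) -> float:
    """How many times faster the work is completed."""
    return before / after


if __name__ == "__main__":
    # Figures from the post: 4 h -> 1.2 h, and 10-day sprints -> 6 days.
    for label, before, after in [("context switch (hours)", 4.0, 1.2),
                                 ("sprint cycle (days)", 10.0, 6.0)]:
        print(f"{label}: {time_reduction(before, after):.0%} less time, "
              f"{speedup(before, after):.2f}x faster")
```

A ten-day cycle shrinking to six days saves 40% of the time, but delivery is roughly 1.67 times faster, so it helps to say which of the two a team is reporting.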

### 3. AI Assistants Integrated via MCP Connectors

Developers are increasingly treating AI as an assistant that lives inside their existing toolchain.

Model Context Protocol (MCP) connectors enable the assistant to pull requirements from Jira, retrieve design specifications from Figma, and write draft documentation directly into Confluence.

Current GPT‑5.2 API cost (retrieved 01/22/2026 09:10 AM ET):

Input tokens: $1.75 per 1 million tokens → $0.00175 / 1 K tokens → $0.00000175 / token.

Output tokens: $14.00 per 1 million tokens → $0.014 / 1 K tokens → $0.000014 / token.

Conversion: a price of $1 per 1 million tokens equals $0.000001 per token; therefore $1.75 per 1 million input tokens = $0.00000175 per token, and $14.00 per 1 million output tokens = $0.000014 per token.
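To turn these per-token rates into a budget figure, here is a rough sketch. It assumes the prices quoted above still hold, and the workload numbers (tokens per request, requests per day) are made up for illustration; `request_cost` and `monthly_cost` are hypothetical helpers, not part of any SDK.

```python
from decimal import Decimal

# Prices quoted in this post, in USD per 1 million tokens (they may have changed since).
INPUT_USD_PER_MILLION = Decimal("1.75")
OUTPUT_USD_PER_MILLION = Decimal("14.00")
TOKENS_PER_MILLION = Decimal(1_000_000)


def request_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimated USD cost of a single request at the quoted rates."""
    input_cost = Decimal(input_tokens) * INPUT_USD_PER_MILLION / TOKENS_PER_MILLION
    output_cost = Decimal(output_tokens) * OUTPUT_USD_PER_MILLION / TOKENS_PER_MILLION
    return input_cost + output_cost


def monthly_cost(requests_per_day: int, input_tokens: int,
                 output_tokens: int, days: int = 30) -> Decimal:
    """Scale a per-request estimate up to a monthly budget line."""
    return request_cost(input_tokens, output_tokens) * requests_per_day * days


if __name__ == "__main__":
    # Hypothetical workload: 2,000 input and 500 output tokens per request,
    # 1,000 requests per day.
    print("per request:", request_cost(2_000, 500))
    print("per month:  ", monthly_cost(1_000, 2_000, 500))
```

With that made-up workload, a request costs about one cent ($0.0105) and a month of 1,000 daily requests comes to $315. `Decimal` is used instead of floats so the tiny per-token amounts don't pick up rounding noise.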

By surfacing the most relevant information at the moment of decision‑making, AI assistants help teams make “the right decisions” with fewer manual lookups.

Source: input and output prices for GPT-5.2 are documented on [OpenAI’s pricing page](https://openai.com/pricing).

### Developers as Product Managers

The best performers will end up being the best managers. The thing about developers is that they may not be great at managing.

The transformation will happen when a Senior or Lead developer is given the reins of a project they are passionate about. This will drive them to become as detail-oriented as a traditional Project Manager.

Traditional Product Managers will also succeed due to the onslaught of no-code tools/vibe coding. This will drive the best Product Managers to deliver on their specific vision.

### Smaller teams

Smaller teams can now punch well above their weight by combining AI-powered vibe-coding agents, no-code/low-code platforms, and automated MCP connectors, turning a single developer’s hours of scaffolding into minutes and letting a two-person squad launch, iterate, and scale in weeks instead of months. The result? Lean squads maintain the flexibility of small-team culture while keeping pace with larger competitors, all on a serverless, pay-per-request foundation.

## Looking Ahead: 2027-2030

### No-code platforms

No-code environments will become the primary engine for rapid app development, embedding AI-powered design and automation so that non-technical creators can launch fully functional, scalable solutions with a few clicks. By 2030, these platforms will support full-stack, serverless architectures, making it possible for anyone to build complex, secure applications without writing a single line of code.

### AI, Robotics, 3D Printing evolution

AI and robotics are the next frontier, and 3D printing is already mainstream. Together, these three technologies form the perfect stack for building advanced, autonomous home robots.

### Satoshis & Tokens - The Technology Micro-Economic World

Satoshis will become the common ground in payments. By breaking digital currency into micro-units, we’ll enable frictionless micro-transactions that rival the U.S. dollar, driving a gradual shift toward a decentralized, token-based economy. This shift will empower individuals and small businesses to transact directly, bypassing traditional banking intermediaries and fostering a more inclusive, transparent financial ecosystem.

## In Closing

As 2026 gets under way, our technical toolkit has shifted from pure syntax to AI-driven intuition, unlocking rapid iteration and democratized product creation. In the coming year, we’ll witness an unprecedented explosion of apps, each built faster and more flexibly than ever before.
